Nginx Ingress Controller

Overview

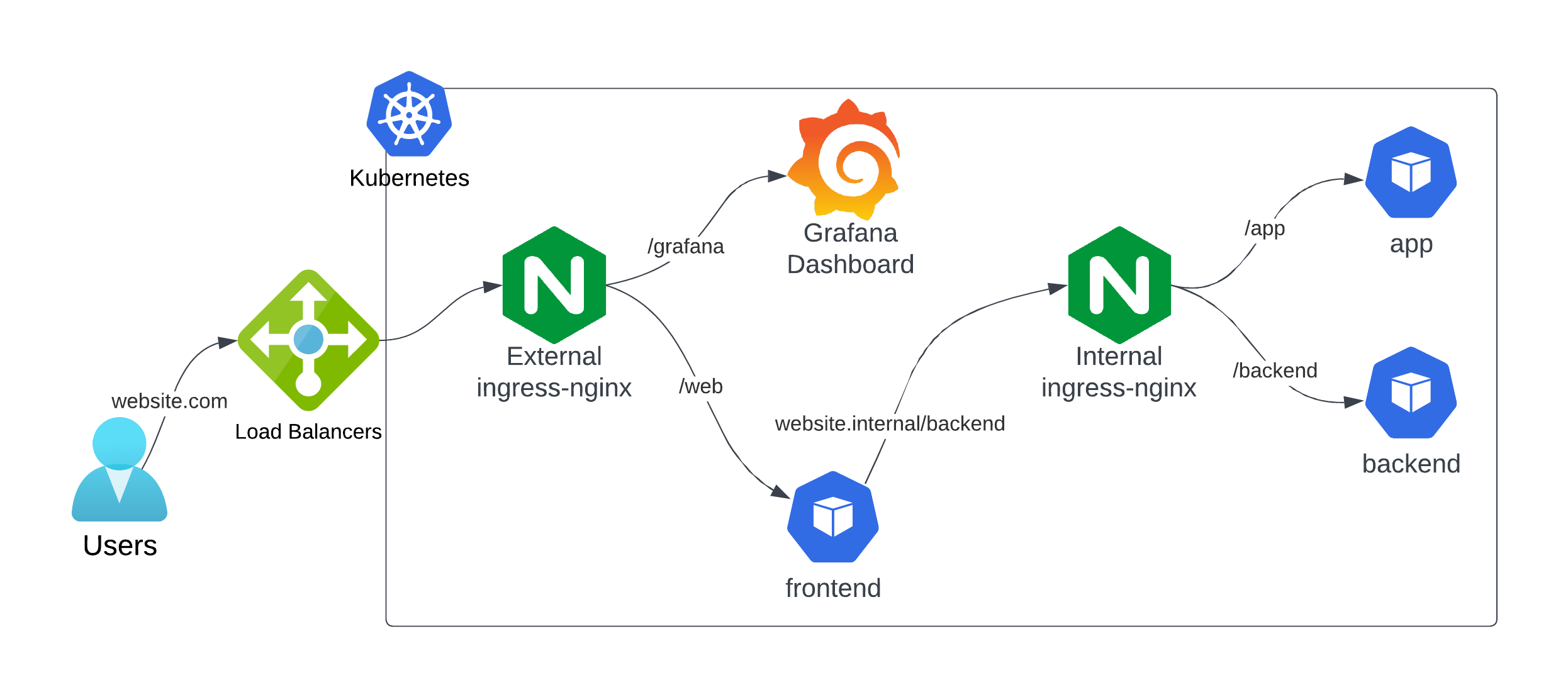

In the Kuberise project, we use two instances of the ingress-nginx controller: one for internal traffic and one for external traffic. Separating them lets us route service-to-service traffic inside the Kubernetes cluster independently of traffic from external clients, and keeps internal-only routes unreachable from outside the cluster.

Architecture Components

Internal Ingress Controller

- Namespace: ingress-nginx-internal
- Service Type: ClusterIP
- Ingress Class: nginx-internal
- Purpose: Manage internal microservice communication

External Ingress Controller

- Namespace: ingress-nginx-external
- Service Type: LoadBalancer
- Ingress Class: nginx-external
- Purpose: Expose services to external networks

Internal and External Ingress Controllers

Internal Ingress Controller

The internal ingress controller routes traffic between internal microservices within the Kubernetes cluster. It uses the nginx-internal ingress class and the kuberise.internal domain, which is defined in the CoreDNS ConfigMap. In local clusters such as minikube, adding kuberise.internal to the CoreDNS ConfigMap is a convenient way to resolve the internal domain to the internal ingress controller service. In managed Kubernetes clusters, you can instead use the internal DNS service offered by the cloud provider, combined with external-dns, to automate this configuration.

External Ingress Controller

The external ingress controller routes traffic from external clients to services within the Kubernetes cluster. It uses the nginx-external ingress class and is exposed through a LoadBalancer service. It terminates TLS and falls back to the cert-manager/wildcard-tls certificate when an Ingress does not specify its own secret.

CoreDNS Configuration in minikube

The CoreDNS ConfigMap includes a rewrite rule that maps the kuberise.internal domain to the service of the internal ingress controller. This lets internal services reach each other through the kuberise.internal domain. The only change from minikube's default CoreDNS ConfigMap is this line:

rewrite name kuberise.internal ingress-nginx-internal-controller.ingress-nginx-internal.svc.cluster.local

You can replace kuberise.internal with any other domain name. Remember to restart the CoreDNS pods afterwards so the new configuration is applied. In the Kuberise.io project, this ConfigMap is deployed automatically from the raw resources folder.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        log
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        hosts {
           0.250.250.254 host.minikube.internal
           fallthrough
        }
        rewrite name kuberise.internal ingress-nginx-internal-controller.ingress-nginx-internal.svc.cluster.local
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
```

Adding Ingress Resources

To add an ingress resource for internal services, use the nginx-internal ingress class. For external services, use the nginx-external ingress class.

Example of an Ingress Resource for an Internal Service Called Backend

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: backend
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  ingressClassName: nginx-internal
  rules:
    - host: kuberise.internal
      http:
        paths:
          - path: /backend
            pathType: Prefix
            backend:
              service:
                name: backend
                port:
                  number: 80
```

If you use templates/generic-deployment to deploy your services, the ingress resource is created automatically. You only need to add the following configuration to your values.yaml file:

```yaml
ingress:
  enabled: true
  className: nginx-internal
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  hosts:
    - host: kuberise.internal
      paths:
        - path: /backend
          pathType: ImplementationSpecific
  tls: []
```

The Kuberise.io repository includes a sample internal service called backend.

Example of an Ingress Resource for an External Service Called Show-Env

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: show-env
spec:
  ingressClassName: nginx-external
  rules:
    - host: show-env.minikube.kuberise.dev
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: show-env
                port:
                  number: 80
  tls:
    - hosts:
        - show-env.minikube.kuberise.dev
```
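The tls entry above lists hosts but no secretName. When an Ingress TLS section has no secretName, ingress-nginx serves its default certificate. The external controller's values set that default to cert-manager/wildcard-tls through default-ssl-certificate. A cert-manager Certificate that produces such a secret could look like the sketch below. The wildcard domain, the resource name, and the issuer are assumptions for a minikube setup, not taken from the Kuberise.io repository.

```yaml
# Sketch only: name, dnsNames and issuerRef are assumptions; adjust to your setup.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: wildcard                  # hypothetical name
  namespace: cert-manager         # must match the namespace part of default-ssl-certificate
spec:
  secretName: wildcard-tls        # must match the secret part of default-ssl-certificate
  dnsNames:
    - "*.minikube.kuberise.dev"   # assumed wildcard covering show-env.minikube.kuberise.dev
  issuerRef:
    name: selfsigned-clusterissuer  # assumed issuer; any ClusterIssuer you run would do
    kind: ClusterIssuer
```

A self-signed wildcard certificate is usually enough for a local cluster. For a real domain you would point issuerRef at an issuer trusted by browsers.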

If you use templates/generic-deployment to deploy your services, the ingress resource is created automatically. You only need to add the following configuration to your values.yaml file:

```yaml
ingress:
  className: nginx-external
```

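If your microservice serves HTTPS itself inside the pod, you can add the following configuration to your values.yaml file. The backend-protocol annotation tells ingress-nginx to connect to the pod over HTTPS. The cert-manager annotation requests a certificate for the listed host from selfsigned-clusterissuer.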

```yaml
ingress:
  className: nginx-external
  annotations:
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    cert-manager.io/cluster-issuer: "selfsigned-clusterissuer"
  tls:
    - secretName: "frontend-https-tls"
      hosts:
        - '{{ include "generic-deployment.fullname" . }}.{{ $.Values.domain }}'
```

The Kuberise.io repository includes a sample external service called show-env. It also includes frontend-https, a sample external service that serves HTTPS inside the pod.
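The frontend-https values reference a ClusterIssuer named selfsigned-clusterissuer. The minimal cert-manager resource below assumes it is a plain self-signed issuer. The actual definition in Kuberise.io may differ.

```yaml
# Assumed definition: a plain self-signed ClusterIssuer; Kuberise.io's may differ.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: selfsigned-clusterissuer   # name referenced by the cert-manager.io/cluster-issuer annotation
spec:
  selfSigned: {}
```

With the cert-manager.io/cluster-issuer annotation on the Ingress, cert-manager creates a Certificate for the hosts in the tls section. It then stores the result in the frontend-https-tls secret.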

Ingress Class and Controller Class

By default, deploying multiple Ingress controllers results in all of them racing to update the status fields of the same Ingress resources in confusing ways. To avoid this, each controller gets its own ingress class, controller class (controllerValue), and leader-election ID (electionID).

Internal Ingress Controller Configuration:

```yaml
controller:
  service:
    # The type of service for the ingress controller. ClusterIP means the service is only accessible within the cluster.
    type: ClusterIP
  ingressClassResource:
    enabled: true
    # The name of the IngressClass resource.
    name: nginx-internal
    default: false
    # The controller value for the IngressClass, used to associate Ingress resources with this controller.
    controllerValue: "k8s.io/ingress-nginx-internal"
  ingressClass: nginx-internal
  # Specifies whether the ingress controller should watch for Ingress resources without an ingress class.
  watchIngressWithoutClass: false
  electionID: ingress-controller-leader-internal
  ingressClassByName: true
```

External Ingress Controller Configuration:

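```yaml
controller:
  # service.type is not set, so the chart default (LoadBalancer) is used.
  extraArgs:
    # Certificate (namespace/secret) served for TLS hosts that do not name their own secret.
    default-ssl-certificate: cert-manager/wildcard-tls
  ingressClassResource:
    enabled: true
    name: nginx-external
    default: false
    controllerValue: "k8s.io/ingress-nginx-external"
  ingressClass: nginx-external
  watchIngressWithoutClass: false
  # A separate leader-election ID so the two controllers do not compete for one lock.
  electionID: ingress-controller-leader-external
  ingressClassByName: true
```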
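With these values, each Helm release should render an IngressClass roughly like the following (chart labels omitted). An Ingress with ingressClassName: nginx-internal is therefore handled only by the controller whose controller class is k8s.io/ingress-nginx-internal. The same applies to nginx-external.

```yaml
# IngressClass created by the internal release (ingressClassResource.name / controllerValue)
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-internal
spec:
  controller: k8s.io/ingress-nginx-internal
---
# IngressClass created by the external release
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx-external
spec:
  controller: k8s.io/ingress-nginx-external
```

Because both releases set default: false, neither IngressClass carries the default-class annotation. An Ingress without ingressClassName is therefore ignored by both controllers, since watchIngressWithoutClass is false.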

Testing Internal Traffic

To test internal traffic, you can get a shell from a container and send a request to another pod using the kuberise.internal domain.

Example: Testing Internal Traffic

- Get a shell from a container:

  ```shell
  kubectl exec -it <pod-name> -- /bin/sh
  ```

- Send a request to another pod using the kuberise.internal domain:

  ```shell
  curl http://kuberise.internal/backend
  ```

- From outside the cluster (for example, from your workstation), verify that the internal service is not accessible even if you use the correct domain:

  ```shell
  curl -H "Host: kuberise.internal" http://127.0.0.1/backend
  # should return 404 Not Found
  ```

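The second step sends the request through the internal ingress controller, so a successful response confirms that internal traffic routing works.

The third step shows that the internal service is unreachable from outside the cluster. This is not due to domain name translation, because the Host header already supplies the correct domain. It is due to the internal ingress controller configuration and its separation from the external ingress controller. Only the internal controller, which is exposed through a ClusterIP service, serves routes for the nginx-internal class. The external controller has no rule for kuberise.internal.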
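The first step assumes a pod whose image ships both a shell and curl. If none is handy, you can use a throwaway pod like the sketch below. The pod name curl-test and the curlimages/curl image are illustrative choices, not part of Kuberise.io.

```yaml
# Hypothetical throwaway test pod; name and image are illustrative choices.
apiVersion: v1
kind: Pod
metadata:
  name: curl-test
spec:
  containers:
    - name: curl
      image: curlimages/curl          # small image that includes curl and /bin/sh
      command: ["sleep", "3600"]      # keep the pod running for an hour so you can exec into it
```

Create it with kubectl apply -f, then use kubectl exec -it curl-test -- /bin/sh as the first step. Delete the pod when you are done.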

By following these steps, you can efficiently manage and route both internal and external traffic in your Kuberise project using the ingress-nginx controllers.

Additional Resources

- For more information, please refer to the official documentation of ingress-nginx

Platform Tools Guide

Kuberise.io comes with a variety of tools that can be managed and configured through its values-file structure. These tools help you manage, monitor, and operate your Kubernetes clusters.

ArgoCD Image Updater

ArgoCD Image Updater automates updating container images in Kubernetes applications managed by ArgoCD. It detects new image versions in container registries and updates the image references of the affected ArgoCD applications, either directly through ArgoCD or by writing the change back to Git. This keeps applications on the latest versions, which is especially useful in development environments with frequent releases.
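As a rough illustration, Image Updater 0.x is configured through annotations on an ArgoCD Application. The fragment below watches a hypothetical image registry.example.com/backend under the alias backend. Newer Image Updater releases may use a different configuration mechanism, so check the documentation for the version you deploy.

```yaml
# Fragment of an ArgoCD Application's metadata; the image path and alias are hypothetical.
metadata:
  annotations:
    # Watch this image under the alias "backend"
    argocd-image-updater.argoproj.io/image-list: backend=registry.example.com/backend
    # Only move to newer semantic-version tags of that image
    argocd-image-updater.argoproj.io/backend.update-strategy: semver
```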